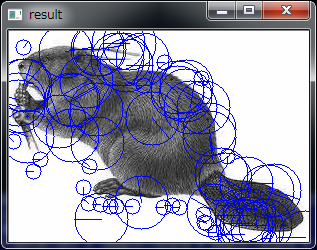

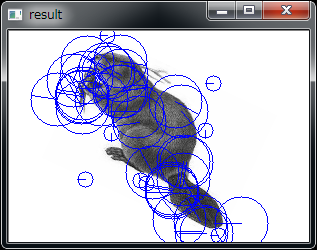

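For various reasons I decided to practice image processing and tried implementing SIFT,

but even before feeding the results into a matching program, a lot of things look wrong.

I need to look into it and fix whatever is wrong...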

TemplateGestureDetector gd = new TemplateGestureDetector(null);

gd.StartRecordTemplate();

for (int i = 0; i < n; i++) {

gd.add(position, kinect.SkeletonEngine);

}

gd.EndRecordTemplate();

gd.SaveState(stream);

using System;

using System.Collections.Generic;

using System.ComponentModel;

using System.Data;

using System.Drawing;

using System.Linq;

using System.Text;

using System.Windows.Forms;

using Microsoft.Research.Kinect.Nui;

namespace Kinect_Residualimage

{

public partial class Form1 : Form

{

// For on-screen display

Bitmap Texture;

// Kinect runtime

Runtime nui;

const int ALPHA_IDX = 3;

const int RED_IDX = 0;

const int GREEN_IDX = 1;

const int BLUE_IDX = 2;

byte[] colorFrame32 = new byte[640 * 480 * 4];

Graphics Graphi;

public Form1()

{

InitializeComponent();

}

private void Form1_Load(object sender, EventArgs e)

{

Texture = new Bitmap(640, 480, System.Drawing.Imaging.PixelFormat.Format32bppArgb);

// Initialize the Kinect

nui = new Runtime();

try

{

nui.Initialize(RuntimeOptions.UseColor);

}

catch (InvalidOperationException)

{

Console.WriteLine("Runtime initialization failed. Please make sure Kinect device is plugged in.");

return;

}

// Open the video stream

try

{

nui.VideoStream.Open(ImageStreamType.Video, 2, ImageResolution.Resolution640x480, ImageType.Color);

}

catch (InvalidOperationException)

{

Console.WriteLine("Failed to open stream. Please make sure to specify a supported image type and resolution.");

return;

}

nui.VideoFrameReady += new EventHandler<ImageFrameReadyEventArgs>(nui_VideoFrameReady);

pictureBox1.Image = new Bitmap(pictureBox1.Width, pictureBox1.Height);

Graphi = Graphics.FromImage(pictureBox1.Image);

}

void nui_VideoFrameReady(object sender, ImageFrameReadyEventArgs e)

{

PlanarImage Image = e.ImageFrame.Image;

byte[] converted = convertColorFrame(Image.Bits, (byte)50);

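// Create the image. The Bitmap only wraps the pinned buffer (it does not copy it),

// so it has to be drawn and disposed before the fixed block ends.

unsafe {

fixed (byte* ptr = &converted[0]) {

using (Bitmap frame = new Bitmap(Image.Width , Image.Height , Image.Width * 4 , System.Drawing.Imaging.PixelFormat.Format32bppArgb , (IntPtr)ptr)) {

Graphi.DrawImage(frame, 0, 0);

}

}

}

pictureBox1.Refresh();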

}

// Fix up the format of the ColorFrame captured from the Kinect (copies the color channels and overwrites alpha)

byte[] convertColorFrame(byte[] colorFrame , byte alpha)

{

for (int i = 0; i < colorFrame32.Length; i += 4)

{

colorFrame32[i + ALPHA_IDX] = alpha;

colorFrame32[i + RED_IDX] = colorFrame[i + 0];

colorFrame32[i + GREEN_IDX] = colorFrame[i + 1];

colorFrame32[i + BLUE_IDX] = colorFrame[i + 2];

}

return colorFrame32;

}

private void Form1_FormClosing(object sender, FormClosingEventArgs e)

{

nui.Uninitialize();

Environment.Exit(0);

}

}

}
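The project name and the alpha of 50 passed to convertColorFrame suggest that the goal is an afterimage effect: each new frame is drawn mostly transparent on top of whatever is already in the picture box. Assuming ordinary source-over blending (my assumption, the post does not say so), one color channel would be updated roughly like this. It is a C++ sketch rather than part of the C# program, and blendChannel is a hypothetical name.

```cpp
#include <cstdint>

// Source-over blend of one 8-bit channel.
// src: incoming frame value, dst: value already on screen,
// alpha: opacity of the incoming frame (0 = invisible, 255 = opaque).
std::uint8_t blendChannel(std::uint8_t src, std::uint8_t dst, std::uint8_t alpha) {
    return static_cast<std::uint8_t>((src * alpha + dst * (255 - alpha)) / 255);
}
```

With alpha = 50 only about a fifth of the new frame is mixed in per draw, so older frames fade out gradually instead of disappearing at once.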

using System;

using System.Collections.Generic;

using System.Linq;

using System.Windows;

using Microsoft.Xna.Framework;

using Microsoft.Xna.Framework.Audio;

using Microsoft.Xna.Framework.Content;

using Microsoft.Xna.Framework.GamerServices;

using Microsoft.Xna.Framework.Graphics;

using Microsoft.Xna.Framework.Input;

using Microsoft.Xna.Framework.Media;

using Microsoft.Research.Kinect.Nui;

namespace XNAFirst

{

/// <summary>

/// This is the main type for your game

/// </summary>

public class Game1 : Microsoft.Xna.Framework.Game

{

GraphicsDeviceManager graphics;

SpriteBatch spriteBatch;

// Kinect runtime

Runtime nui;

int totalFrames = 0;

int lastFrames = 0;

DateTime lastTime = DateTime.MaxValue;

// Textures

private Texture2D texDepth = null;

private Texture2D texBorn = null;

private Texture2D texImage = null;

const int ALPHA_IDX = 3;

const int RED_IDX = 0;

const int GREEN_IDX = 1;

const int BLUE_IDX = 2;

byte[] depthFrame32 = new byte[320 * 240 * 4];

byte[] colorFrame32 = new byte[640 * 480 * 4];

public Game1()

{

graphics = new GraphicsDeviceManager(this);

Content.RootDirectory = "Content";

graphics.PreferredBackBufferWidth = 640;

graphics.PreferredBackBufferHeight = 480;

}

protected override void Initialize()

{

// Initialize the Kinect

nui = new Runtime();

try {

nui.Initialize(RuntimeOptions.UseDepthAndPlayerIndex | RuntimeOptions.UseSkeletalTracking | RuntimeOptions.UseColor);

} catch (InvalidOperationException) {

System.Windows.MessageBox.Show("Runtime initialization failed. Please make sure Kinect device is plugged in.");

return;

}

// Open the video stream and the depth stream

try {

nui.VideoStream.Open(ImageStreamType.Video , 2 , ImageResolution.Resolution640x480 , ImageType.Color);

nui.DepthStream.Open(ImageStreamType.Depth , 2 , ImageResolution.Resolution320x240 , ImageType.DepthAndPlayerIndex);

} catch (InvalidOperationException) {

System.Windows.MessageBox.Show("Failed to open stream. Please make sure to specify a supported image type and resolution.");

return;

}

// Record the current time for FPS control

lastTime = DateTime.Now;

// I would like to fetch frames without relying on events, so this part is on hold for now

nui.DepthFrameReady += new EventHandler<ImageFrameReadyEventArgs>(nui_DepthFrameReady);

nui.SkeletonFrameReady += new EventHandler<SkeletonFrameReadyEventArgs>(nui_SkeletonFrameReady);

nui.VideoFrameReady += new EventHandler<ImageFrameReadyEventArgs>(nui_VideoFrameReady);

base.Initialize();

}

void nui_VideoFrameReady(object sender, ImageFrameReadyEventArgs e)

{

//32bit per pixel RGBA image

PlanarImage Image = e.ImageFrame.Image;

texImage = new Texture2D(this.GraphicsDevice, Image.Width, Image.Height , false , SurfaceFormat.Color);

byte[] converted = convertColorFrame(Image.Bits);

texImage.SetData(converted);

}

void nui_SkeletonFrameReady(object sender, SkeletonFrameReadyEventArgs e)

{

// On hold for now

}

void nui_DepthFrameReady(object sender, ImageFrameReadyEventArgs e)

{

PlanarImage Image = e.ImageFrame.Image;

texDepth = new Texture2D(this.GraphicsDevice, Image.Width, Image.Height , false , SurfaceFormat.Color);

// Get the frame as raw bytes

// and make the depth buffer easier to look at

byte[] convertedDepthFrame = convertDepthFrame(Image.Bits);

// Create the image (or sprite) from the byte data

texDepth.SetData(convertedDepthFrame);

totalFrames++;

// Measure FPS

DateTime cur = DateTime.Now;

if (cur.Subtract(lastTime) > TimeSpan.FromSeconds(1)) {

int frameDiff = totalFrames - lastFrames;

lastFrames = totalFrames;

lastTime = cur;

}

}

protected override void LoadContent()

{

// Create a new SpriteBatch, which can be used to draw textures.

spriteBatch = new SpriteBatch(GraphicsDevice);

// TODO: use this.Content to load your game content here

}

protected override void UnloadContent()

{

nui.Uninitialize();

}

protected override void Update(GameTime gameTime)

{

// Allows the game to exit

if (GamePad.GetState(PlayerIndex.One).Buttons.Back == ButtonState.Pressed)

this.Exit();

// TODO: Add your update logic here

base.Update(gameTime);

}

protected override void Draw(GameTime gameTime)

{

GraphicsDevice.Clear(Color.White);

this.spriteBatch.Begin();

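// texImage stays null until the first video frame arrives, so skip drawing until then

if (texImage != null)

this.spriteBatch.Draw(texImage, new Rectangle(0, 0, graphics.PreferredBackBufferWidth, graphics.PreferredBackBufferHeight), Color.White);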

//this.spriteBatch.Draw(texDepth, new Rectangle(0, 0, graphics.PreferredBackBufferWidth, graphics.PreferredBackBufferHeight), Color.White);

this.spriteBatch.End();

base.Draw(gameTime);

}

// Convert the ColorFrame captured from the Kinect into XNA's Color format (swaps the first and third channel and sets alpha to 255)

byte[] convertColorFrame(byte[] colorFrame)

{

for (int i = 0 ; i < colorFrame32.Length ; i += 4) {

colorFrame32[i + ALPHA_IDX] = 255;

colorFrame32[i + RED_IDX] = colorFrame[i + 2];

colorFrame32[i + GREEN_IDX] = colorFrame[i + 1];

colorFrame32[i + BLUE_IDX] = colorFrame[i];

}

return colorFrame32;

}

// Converts a 16-bit grayscale depth frame which includes player indexes into a 32-bit frame

// that displays different players in different colors

// The XNA texture is assumed to use the Color format

byte[] convertDepthFrame(byte[] depthFrame16)

{

for (int i16 = 0, i32 = 0; i16 < depthFrame16.Length && i32 < depthFrame32.Length; i16 += 2, i32 += 4)

{

int player = depthFrame16[i16] & 0x07;

int realDepth = (depthFrame16[i16 + 1] << 5) | (depthFrame16[i16] >> 3);

// transform 13-bit depth information into an 8-bit intensity appropriate

// for display (we disregard information in most significant bit)

byte intensity = (byte)(255 - (255 * realDepth / 0x0fff));

depthFrame32[i32 + ALPHA_IDX] = 255;

depthFrame32[i32 + RED_IDX] = 0;

depthFrame32[i32 + GREEN_IDX] = 0;

depthFrame32[i32 + BLUE_IDX] = 0;

// choose different display colors based on player

switch (player)

{

case 0:

depthFrame32[i32 + RED_IDX] = (byte)(intensity / 2);

depthFrame32[i32 + GREEN_IDX] = (byte)(intensity / 2);

depthFrame32[i32 + BLUE_IDX] = (byte)(intensity / 2);

break;

case 1:

depthFrame32[i32 + RED_IDX] = intensity;

break;

case 2:

depthFrame32[i32 + GREEN_IDX] = intensity;

break;

case 3:

depthFrame32[i32 + RED_IDX] = (byte)(intensity / 4);

depthFrame32[i32 + GREEN_IDX] = (byte)(intensity);

depthFrame32[i32 + BLUE_IDX] = (byte)(intensity);

break;

case 4:

depthFrame32[i32 + RED_IDX] = (byte)(intensity);

depthFrame32[i32 + GREEN_IDX] = (byte)(intensity);

depthFrame32[i32 + BLUE_IDX] = (byte)(intensity / 4);

break;

case 5:

depthFrame32[i32 + RED_IDX] = (byte)(intensity);

depthFrame32[i32 + GREEN_IDX] = (byte)(intensity / 4);

depthFrame32[i32 + BLUE_IDX] = (byte)(intensity);

break;

case 6:

depthFrame32[i32 + RED_IDX] = (byte)(intensity / 2);

depthFrame32[i32 + GREEN_IDX] = (byte)(intensity / 2);

depthFrame32[i32 + BLUE_IDX] = (byte)(intensity);

break;

case 7:

depthFrame32[i32 + RED_IDX] = (byte)(255 - intensity);

depthFrame32[i32 + GREEN_IDX] = (byte)(255 - intensity);

depthFrame32[i32 + BLUE_IDX] = (byte)(255 - intensity);

break;

}

}

return depthFrame32;

}

}

}
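The bit manipulation in convertDepthFrame is easier to check in isolation. Below is a minimal C++ sketch (not part of the C# program) that assumes the same layout the code above relies on: each depth pixel is two bytes, low byte first, with the player index in the low 3 bits and the depth in the remaining 13 bits. DepthPixel, unpackDepthPixel and depthToIntensity are hypothetical names.

```cpp
#include <cstdint>

// Hypothetical helper type: one decoded depth pixel.
struct DepthPixel {
    int player;  // low 3 bits: 0 means no player, 1..7 is a player index
    int depth;   // remaining 13 bits
};

// Decode one pixel from its low and high byte.
DepthPixel unpackDepthPixel(std::uint8_t lo, std::uint8_t hi) {
    DepthPixel p;
    p.player = lo & 0x07;
    p.depth = (hi << 5) | (lo >> 3);
    return p;
}

// Same formula as in convertDepthFrame: nearer pixels come out brighter.
// Depths above 0x0fff wrap around when narrowed to 8 bits, which matches
// the "disregard the most significant bit" note in the original comment.
std::uint8_t depthToIntensity(int depth) {
    return static_cast<std::uint8_t>(255 - (255 * depth / 0x0fff));
}
```

For example, the byte pair (0x0A, 0x01) decodes to player 2 at depth 33, which maps to an intensity of 253.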

#include <iostream>

#include <vector>

#include <map>

#include <set>

#include <string.h>

using namespace std;

struct xy {

int first;

int second;

};

xy presets1[1000];

xy input[1000];

int main(void)

{

int n;

while (1) {

cin >> n;

if (n == 0) {

break;

}

memset(presets1 , 0 , sizeof(presets1));

memset(input , 0 , sizeof(input));

int m;

cin >> m;

for (int i = 0 ; i < m ; i++) {

cin >> presets1[i].first >> presets1[i].second;

}

for (int i = 0 ; i < n ; i++) {

int check;

cin >> check;

for (int j = 0 ; j < check ; j++) {

cin >> input[j].first >> input[j].second;

}

if (m != check) {

continue;

}

for (int k = 0 ; k < 4 ; k++) {

int ix0 = input[0].first;

int iy0 = input[0].second;

int px0 = presets1[0].first;

int py0 = presets1[0].second;

for (int j = 0 ; j < check ; j++) {

if (presets1[j].first - px0 != input[j].first - ix0 || presets1[j].second - py0 != input[j].second - iy0) {

goto NEXT;

}

}

cout << i + 1 << endl;

goto END;

NEXT:;

px0 = presets1[check - 1].first;

py0 = presets1[check - 1].second;

for (int j = 0 ; j < check ; j++) {

if (presets1[check - j - 1].first - px0 != input[j].first - ix0 || presets1[check - j - 1].second - py0 != input[j].second - iy0) {

goto NEXT2;

}

}

cout << i + 1 << endl;

goto END;

NEXT2:;

// Rotate the input by 90 degrees: (x, y) -> (y, -x)

for (int j = 0 ; j < check ; j++) {

int temp = input[j].first;

input[j].first = input[j].second;

input[j].second = -temp;

}

}

END:;

}

cout << "+++++" << endl;

}

return 0;

}
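The goto-based matching above can also be written with small helper functions. The following is only a sketch of the same idea as I read it from the code: compare offsets from the first point so that translation does not matter, try the template both forwards and backwards, and rotate the candidate by 90 degrees up to four times. Point, rotate90, sameUpToTranslation and sameShape are hypothetical names.

```cpp
#include <cstddef>
#include <vector>

struct Point { int x; int y; };

// Quarter turn about the origin (clockwise when the y axis points up).
Point rotate90(Point p) { return Point{p.y, -p.x}; }

// True if b is a translated copy of a (same offsets from the first point).
bool sameUpToTranslation(const std::vector<Point>& a, const std::vector<Point>& b) {
    if (a.size() != b.size()) return false;
    for (std::size_t i = 0; i < a.size(); ++i) {
        if (a[i].x - a[0].x != b[i].x - b[0].x) return false;
        if (a[i].y - a[0].y != b[i].y - b[0].y) return false;
    }
    return true;
}

// True if candidate equals templ after translation, rotation by a multiple
// of 90 degrees, and optionally reversing the point order.
bool sameShape(const std::vector<Point>& templ, std::vector<Point> candidate) {
    const std::vector<Point> reversed(templ.rbegin(), templ.rend());
    for (int k = 0; k < 4; ++k) {
        if (sameUpToTranslation(templ, candidate)) return true;
        if (sameUpToTranslation(reversed, candidate)) return true;
        for (Point& p : candidate) p = rotate90(p);
    }
    return false;
}
```

Mirror images are deliberately not matched, because a flip cannot be reached by rotating and translating alone.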

#include <iostream>

#include <vector>

#include <map>

#include <string>

using namespace std;

vector<pair<string , int> > treasure;

int main(void)

{

while (1) {

int n , m;

cin >> n >> m;

if (n == 0 && m == 0) {

break;

}

treasure.clear();

for (int i = 0 ; i < n ; i++) {

pair<string , int> temp;

cin >> temp.first >> temp.second;

treasure.push_back(temp);

}

int result = 0;

string have;

for (int i = 0 ; i < m ; i++) {

cin >> have;

for (int j = 0 ; j < n ; j++) {

for (int k = 0 ; k < treasure[j].first.size() ; k++) {

if (treasure[j].first[k] == '*') {

continue;

}

if (treasure[j].first[k] != have[k]) {

goto NEXT;

}

}

result += treasure[j].second;

break;

NEXT:;

}

}

cout << result << endl;

}

return 0;

}
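The inner loop above is a fixed-position wildcard match: '*' in the winning pattern accepts any character, and every other position has to match exactly. As a sketch, it can be pulled out into a function. matchesPattern is a hypothetical name, and the length check is my addition; the program above assumes the two strings always have the same length.

```cpp
#include <cstddef>
#include <string>

// True if ticket matches pattern, where '*' in pattern matches any single
// character at that position. Strings of different length never match
// (an added safety check, not present in the original loop).
bool matchesPattern(const std::string& pattern, const std::string& ticket) {
    if (pattern.size() != ticket.size()) return false;
    for (std::size_t k = 0; k < pattern.size(); ++k) {
        if (pattern[k] != '*' && pattern[k] != ticket[k]) return false;
    }
    return true;
}
```

With this helper the j loop would reduce to adding treasure[j].second for the first pattern that matches and then breaking, with no goto needed.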

#include <iostream>

#include <vector>

using namespace std;

vector<int> real;

int main(void)

{

int N , A , B , C , X;

while (1) {

cin >> N >> A >> B >> C >> X;

if (N == 0 && A == 0 && B == 0 && C == 0 && X == 0) {

break;

}

real.clear();

for (int i = 0 ; i < N ; i++) {

int temp;

cin >> temp;

real.push_back(temp);

}

int count = 0;

for (int i = 0 ; i < real.size() ;) {

if (X == real[i]) {

i++;

if (i == real.size()) {

break;

}

}

X = (A * X + B) % C;

count++;

if (count > 10000) {

count = -1;

break;

}

}

cout << count << endl;

}

return 0;

}
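The loop above steps the generator X = (A * X + B) % C once per frame and moves on to the next target value whenever the current X matches it. Here is the same logic as a standalone function, as a sketch only. framesToStopAll and maxFrames are hypothetical names; the limit of 10000 is taken from the code above.

```cpp
#include <cstddef>
#include <vector>

// Returns the number of frames needed until every target value has been hit
// in order, or -1 if that does not happen within maxFrames frames.
int framesToStopAll(int a, int b, int c, int x,
                    const std::vector<int>& targets, int maxFrames = 10000) {
    std::size_t next = 0;  // index of the target we are waiting for
    for (int frame = 0; frame <= maxFrames; ++frame) {
        if (next < targets.size() && x == targets[next]) ++next;
        if (next == targets.size()) return frame;
        x = (a * x + b) % c;  // advance the generator to the next frame
    }
    return -1;
}
```

For A = 5, B = 7, C = 11, X = 10 and targets {2, 4}, the sequence is 10, 2, 6, 4, so the second target is hit on frame 3.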